Brands, Don’t Sleep on Snap

Last week at its annual developer summit, Snap made huge strides in positioning itself as the dominant platform for AR.

Let’s start with the numbers. Snapchat has 229 million daily active users, and the company reports that 170 million of them use AR on Snapchat every day. That works out to more than 70% of its daily audience interacting with or actively using AR lenses. It’s also more than the number of Twitter users, full stop.

The numbers alone are astonishing. But there’s evidence that AR has the power to not only draw people into a platform, but keep them there. In Q2 of 2019, Snapchat saw a dramatic increase in users (between 7 and 8 million). And what life-altering content brought people rushing to the platform? An AR lens. Specifically, the beautifully simple (and slightly terrifying) Baby Face filter.

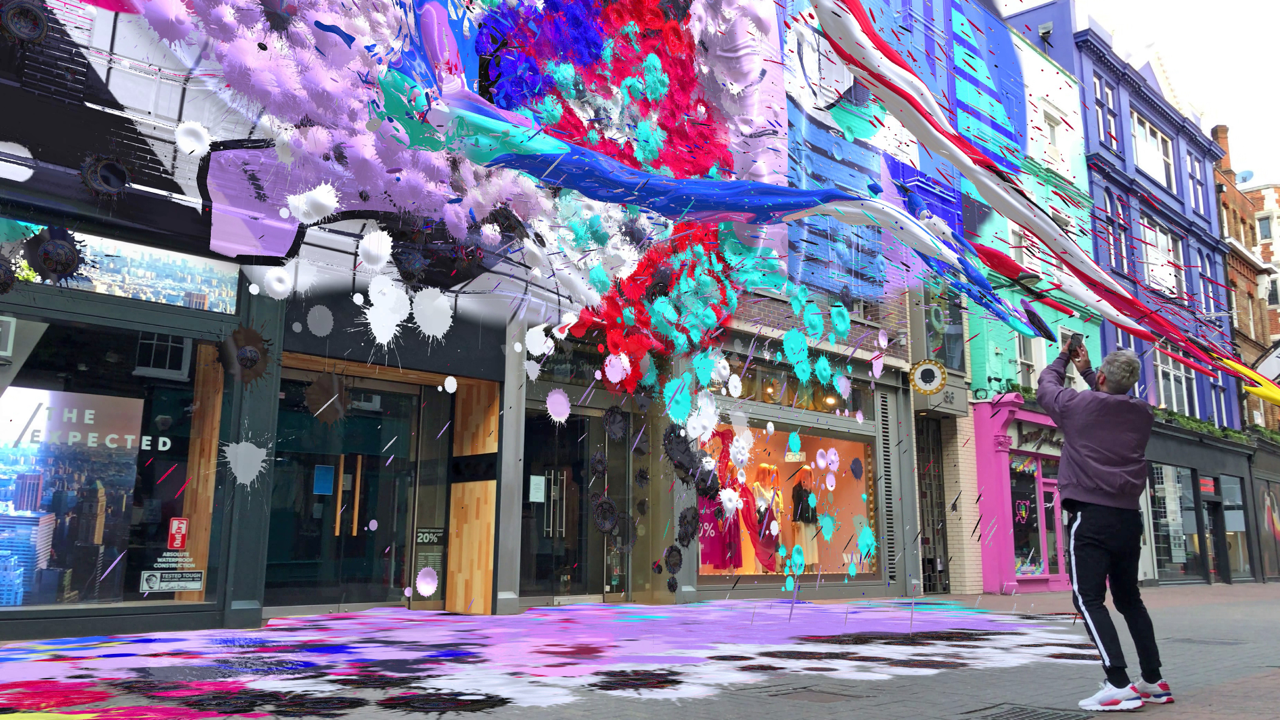

While last week’s announcements like ‘Voice Scan’ and ‘Snap Map’ aren’t as likely to instantly pull in the same numbers, they show very clearly that Snap is betting on a future where AR is the way that humans engage with information. AR will be the window through which we view the world and on which our information is served, first through our mobile devices but ultimately through glasses or other head-mounted displays.

Although Snapchat can be viewed as a frivolous endeavour full of rainbow vomit and puppy filters, dig a little deeper and we can see that it’s laying the groundwork to grow from a social network into a platform for experiencing the world through AR. Snap started off using fun filters as a way to normalise the behaviour of engaging with AR content, but now it’s getting serious.

Last week’s nod towards commerce was clear: social AR is fast becoming the next point of sale. ‘Try on’ features are becoming increasingly popular and, thanks to the new ability for developers to import their own machine learning models into the platform, sneaker try-on experiences such as Wannaby’s will become commonplace. When combined with a slick and seamless path to purchase (something Snap also does well), this leads to fantastically entertaining and useful experiences with provable ROI.

Snap’s vision to use AR as a way to overlay information, content and experiences onto the world was also strikingly clear from its ‘Scan’ feature. A ‘press and hold’ function on the screen will open up suggested lenses, served contextually based on what’s in front of you. Foreground flora, for example, will open up PlantSnap to identify 90% of the world’s plants and trees, while a pooch launches ‘Dog Scanner’, which does pretty much what it says on the tin.

Extrapolate this out and you have a method of using the camera, machine learning and augmented reality to contextualise the world around you: product reviews at the drop of a hat, nutritional information in the supermarket aisle and local stockist information triggered from a poster.

Distribution of AR features has always been a thorn in the side of brands that want to create immersive experiences of any kind. To balance useful features, stable performance and high-quality assets, a downloadable native app has been the only legitimate avenue. This means investing not just in the AR feature, but also in the development of, or integration with, an app, plus media spend to deliver downloads and a well thought-out strategy to ensure that those downloads become active users. What Snapchat is pointing to is an ecosystem with baked-in reach, where brands can experiment and evolve their AR and immersive strategy in increments, with greatly reduced risk and a smaller requirement for business-wide stakeholder buy-in.

The future of AR for brands isn’t simply creative expression; it’s the next generation of storytelling, the new way of engaging with consumers and the future of commerce. The home of the puppy filter may just be the most grown-up representation of this future we have. Sleep on it at your peril.

Rosh Singh (MD) and Adam Mingay (Client Partnerships) @ UNIT9